For some time, Google has been holding a weekly Google Webmaster Central Hangout. The aim is to give “you” an opportunity to ask questions and give feedback to Google about search-related issues.

If you don’t have time to watch the hour-long video, you can read our transcript of it below. We have removed all the superfluous conversation and, where possible, expanded on the various topics by adding commentary and other references for further reading. You will also see a timestamp next to each question title so that you can jump to the relevant part of the video should you wish.

To help you find the questions of most interest to you, here is a summary of all the questions (with internal links to take you to the relevant part of the page):

- Question 1: 0:41 - Why do Indexed URLs in Sitemap Fluctuate in Webmaster tools, and are there any plans to indicate which URLs are not indexed?

- Question 2: 2:47 - What new stuff is being released for the Search Queries Report?

- Question 3: 4:30 - Could Gmail be used to store Webmaster Tools legacy data?

- Question 4: 5:34 - Any updates on Penguin? & Does Penguin Crawl Sites?

- Question 5: 7:04 - Does HTTPS boost SEO Performance or Traffic?

- Question 6: 8:37 - Seroundtable Panda Penalty Discussion - Bad Quality Comments to blame?

- Question 7: 27:21 - How much weight does Google give to Page Content vs. Booking Engine Quality in rankings?

- Question 8: 28:51 - How long after publishing more quality content will it take before rankings improve?

- Question 9: 30:28 - Question about merging 2 websites together.

- Question 10: 32:05 - Can links from bad websites have a negative effect on my website?

- Question 11: 33:52 - If a site has bad links would good links help without using the Disavow Tool?

- Question 12: 37:25 - Question about Negative SEO and Disavowing links daily

- Question 13: 40:08 - Where does the Knowledge Graph take its data?

- Question 14: 41:09 - How do you explain the extremely small changes in search results in the last three weeks?

- Question 15: 41:46 - Use Keyword Based Traffic to Assess User Satisfaction?

- Question 16: 46:35 - Discussion about the “Wrong” type of traffic.

- Question 17: 50:04 - Question about Hidden Tabbed Content. Is tabbed content bad for SEO?

- Question 18: 52:05 - A question about posts with large header images.

- Question 19: 53:20 - Can you tell us more about what’s happening in Webmaster Tools next year?

- Question 20: 54:57 - Does Panda or Penguin penalty trigger a mass crawl of your site?

- Question 21: 56:22 - Is Google Panda a penalty?

- Question 22: 58:10 - Discussion about an old penalty-hit site’s chance to recover

- Question 23: 60:06 - Site Discussion - Indicates sometimes Google needs to change their algorithm

Transcript and Commentary: Google Webmaster Central Hangout, 30 Dec 2014

Introduction - 0:06

JOHN MUELLER: My name is John Mueller. I am a Webmaster Trends Analyst at Google in Switzerland, and part of my role is to talk with webmasters like you guys, to make sure that we answer your questions, and that we take your feedback on board when we work on our products and on our search algorithms.

Question 1: 0:41 - Why do Indexed URLs in Sitemap Fluctuate in Webmaster tools, and are there any plans to indicate which URLs are not indexed?

LYLE ROMER : Real quick, a Webmaster Tools question. I noticed that in the sitemaps section, where it says how many URLs are indexed out of how many are in the sitemap, the number seems to fluctuate even though nothing has changed in the URLs that are actually in the sitemap. So, a two-part question: one, would you have any idea what causes that phenomenon, and two, might there be any plans in the future to actually indicate which items from the sitemap are and are not indexed?

JOHN MUELLER: It’s hard to say what could be causing that. My guess is that we’re just dropping and re-picking up URLs. I could see that happening, for example, if you have a fairly large site: sometimes we can pick up a little bit more of it, and sometimes we can’t pick up all of it. So those kinds of fluctuations are normal as we crawl and index a site. With regards to getting information about the URLs that aren’t indexed, I can see that making sense for a smaller site. But for any larger site, there are going to be lots and lots of URLs that aren’t indexed, and having those individual URLs isn’t really going to help you that much. So what I’d do instead is split your sitemap file up into logical segments and figure out which parts are getting indexed more and which parts aren’t getting indexed as much, and look at your site on an aggregate level. And say, OK, all the category pages are getting indexed, and those are essentially the most important pages for my website, so that’s fine. If not all of the blog posts are getting indexed, that’s something you can live with. If the important pages aren’t getting indexed, then you might want to think about what you could change in your site structure in general to make it easier for us to recognize that those pages are really important.

The Webmaster Commentary: John Mueller suggests splitting your sitemaps up, but the major sitemap plugins (for WordPress at least) only let you divide your sitemap by post, page, category, tag and, in some cases, video. We use the Yoast SEO plugin, which does exactly this. What might be more useful is a sitemap for each post category, so you can see whether any category is failing for the purposes of SEO, but we could not find a WordPress plugin that did this automatically.

Having multiple sitemaps also has some benefits when it comes to crawling and indexing your site, and you can see an interesting case study on this here.
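If your plugin can’t split sitemaps by category, generating them yourself is not much work. The sketch below is a minimal, hypothetical example: it assumes you can export your posts as `(category, url)` pairs with URL-safe category slugs, writes one sitemap per category in the sitemaps.org format, and adds a sitemap index that points at each of them. It is not a WordPress API; the function names and the `example.com` URLs are illustrative only.

```python
from collections import defaultdict
from xml.etree import ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_urlset(urls):
    """Return a <urlset> sitemap document listing the given page URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        url_el = ET.SubElement(urlset, "url")
        ET.SubElement(url_el, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


def build_sitemap_index(sitemap_urls):
    """Return a <sitemapindex> document pointing at each child sitemap."""
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for loc in sitemap_urls:
        sitemap_el = ET.SubElement(index, "sitemap")
        ET.SubElement(sitemap_el, "loc").text = loc
    return ET.tostring(index, encoding="unicode")


def split_sitemaps_by_category(posts, base_url):
    """Group (category, url) pairs into one sitemap per category plus an index.

    Returns a dict mapping file name -> XML text. Category names are assumed
    to be URL-safe slugs (hypothetical input format).
    """
    groups = defaultdict(list)
    for category, url in posts:
        groups[category].append(url)

    files = {}
    for category in sorted(groups):
        files[f"sitemap-{category}.xml"] = build_urlset(groups[category])

    child_names = list(files)  # capture the child sitemaps before adding the index
    files["sitemap_index.xml"] = build_sitemap_index(
        f"{base_url}/{name}" for name in child_names
    )
    return files
```

With each category in its own file, the submitted/indexed counts in Webmaster Tools should be reported per sitemap, which gives you the aggregate, per-section view John describes without needing a list of individual unindexed URLs.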

Question 2: 2:47 - What new stuff is being released for the Search Queries Report?

BARRY : John, since nobody’s around, could you share with us what fun stuff is coming out in the Search Queries Report in the Webmaster Tools?

JOHN MUELLER: Uh, not really.

The Webmaster Commentary: John Mueller went on to say only that they are working to bring together all the information about search queries so that you can view the parts relevant to your site, but he couldn’t give any details because the work was at an early stage. He added that they also try not to release many changes over the Christmas period. You can read more about the Search Queries Report here.

Question 3: 4:30 - Could Gmail be used to store Webmaster Tools legacy data?

JOSH BACHYNSKI : Did you happen to get my email? I did have one feature request that I thought would be interesting. I thought, why not use Gmail storage as extra space to save our legacy Webmaster Tools search query data? Or what if we gave you FTP information? We’d gladly store it on our sites. If you need space, we have space, if that’s the issue.

JOHN MUELLER : Yeah. […] I don’t think that would work from a technical point of view, because the storage has to be quick enough to be queryable directly. So I don’t think that would make sense directly. But I did pass that on, and I think that kind of encourages the team to also see what we can do to bring more data there.

The Webmaster Commentary: The limited data in Webmaster Tools can cause a real headache when you are trying to find a reliable source of data for your website, such as which backlinks are pointing to your site. Google has confirmed in its FAQ that not all the data is necessarily present, and that just because a particular link does not appear in Webmaster Tools doesn’t mean that Google doesn’t know about it. As a result, people often have to pay for third-party services such as Ahrefs or Majestic. If you need to disavow links, we think CognitiveSEO is your best bet, as it amalgamates links from all these sources and you can create the disavow file in minutes with its automatic bad link detection and disavow tool.
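Whichever tool you use, the file you upload is plain text in the format Google documents for its disavow links tool: one URL or `domain:` entry per line, with lines starting with `#` treated as comments. The sketch below is a minimal, hypothetical helper that assembles such a file from link lists you have already reviewed by hand; it deduplicates domains and drops individual URLs whose host is already disavowed as a whole domain. The function name, the default comment, and the input lists are assumptions for illustration.

```python
from urllib.parse import urlparse


def build_disavow(bad_domains, bad_urls, note="Flagged during manual link review"):
    """Return disavow-file text: a '#' comment, 'domain:' lines, then single URLs."""
    # Normalise and deduplicate domains (hostnames are case-insensitive).
    domains = sorted({d.strip().lower() for d in bad_domains if d.strip()})

    lines = [f"# {note}"]
    lines += [f"domain:{d}" for d in domains]

    for url in sorted(set(bad_urls)):
        # urlparse lowercases .hostname; skip URLs already covered by an
        # exact-match domain: entry above.
        if urlparse(url).hostname in domains:
            continue
        lines.append(url)

    return "\n".join(lines) + "\n"
```

Keeping the generation scripted makes it easy to regenerate the file as your review continues, but the decision about which links are genuinely bad still has to be made by a person.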

Question 4: 5:34 - Any updates on Penguin? & Does Penguin Crawl Sites?

JOSH BACHYNSKI : So I guess we’re obliged to ask about Penguin and if you have any new information.

JOHN MUELLER : I don’t really have anything new to share there, no. […] This is also, I guess, one of the areas where the engineers are trying to take a break and see how things go at the moment, so no big changes happening there.

JOSH BACHYNSKI : […] Do you know– does Penguin crawl sites? I know Panda will do a site crawl sometimes. But I was wondering if Penguin was doing a site crawl, because I’ve been seeing really weird fluctuations in various websites’ crawl rates.

JOHN MUELLER : That would probably be completely separate from like an algorithm like Penguin or Panda. That’s something where usually there are technical signals that we pick up when we kind of re-crawl a site in a big way. And those are the kind of things where we look at what we’ve seen from the recent crawls and kind of recognize that something significant has changed. And then we’ll say, oh, well, maybe we should check the rest of the site as well. So it’s not so much that a quality or a webspam algorithm would say we should re-crawl this website, but it’s more like we see significant changes on this website, so we say, OK. Well, let’s take a look at what else changed.

Question 5: 7:04 - Does HTTPS boost SEO Performance or Traffic?

Written Submitted Question: Here we have a question about HTTPS. I checked a few websites that made this change, and I didn’t see a boost in their SEO performance or traffic. So why should we move our website to HTTPS if it’s not going to help?

JOHN MUELLER: So I think first off it’s important to keep in mind that this is a fairly small change at the moment. So it’s not something where you would see a jump from number 10 to number one immediately. And on the other hand, this is also something where I think the long-term trend is just going to be towards HTTPS. So in the long run, it’s not going to be something where users will go to a website and say, well, if only this website were available in an unencrypted, insecure way, then I’d love to visit it more often. Because there’s really no downside to implementing HTTPS once you have that set up. It’s something that helps your users and that helps you, because you know the content that you put online is the content that the users see. So it’s something where I think that the general move is just going to happen more and more in that direction. I imagine over time, you’ll see that this factor is something that we can improve on and where we can say, well, it really makes sense to push this a little bit more so that we can perhaps count it a little bit higher in the rankings. So it’s something where I imagine over the long run, you’ll see a bigger change than you would see immediately at the moment.

The Webmaster Commentary: When this was first announced, there was a mad rush by many internet marketers to implement SSL on their sites. We too took the hint from Google and set up SSL. SSL companies went crazy, and there was a slew of blog posts claiming that SSL would increase your rankings. Since the announcement in August 2014, people have begun to sober up a bit, and Google (as you can see above) has played down the impact SSL will have on your site. While it certainly looks as if it may play a bigger part in the future, make sure you are aware of all the issues, especially if you display advertising on your site.

Actually, there are currently a few reasons why you might not want to convert to an HTTPS-based site. Apart from the relatively minor extra cost of an SSL certificate and perhaps a dedicated IP address (although many hosts now support SNI, which means a dedicated IP is not required for an SSL certificate), there are a few cases where HTTPS can harm your site, or at least the income from it.

One example is if you display advertising on your site. Many ad networks work by having advertisers bid for your ad space, so the more demand there is for that space, the higher the payouts (cost per click or cost per impression). Unfortunately, many advertisers do not submit HTTPS-friendly ads, which means that if you have an HTTPS site there will be less demand for your ad space and, as a result, your website revenue will decrease. In one case, the cost per click dropped by a massive 75%.

Another reason you might not want to move to HTTPS is that many shared hosting companies offering sophisticated technologies such as Varnish-based caching (e.g. SiteGround) do not support that caching for HTTPS sites. Your website will still work on those hosting providers, but the technology that speeds up your site will not.
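The advertising problem comes down to ads that still load resources over plain `http://` on an `https://` page (so-called mixed content, which browsers may block or flag). One rough way to audit a page before switching is to list those resources. This is a minimal sketch using Python’s standard `html.parser`; the set of tags checked is an assumption covering common sub-resources, and it only sees tags in the static HTML, so ads injected later by JavaScript will not show up.

```python
from html.parser import HTMLParser

# Tags that make the browser fetch a sub-resource, and the attribute holding
# its URL (an assumed, non-exhaustive list).
RESOURCE_ATTRS = {
    "script": "src",
    "img": "src",
    "iframe": "src",
    "embed": "src",
    "source": "src",
}


class MixedContentFinder(HTMLParser):
    """Collect (tag, url) pairs for sub-resources requested over plain HTTP."""

    def __init__(self):
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        attr_name = RESOURCE_ATTRS.get(tag)
        if attr_name is None:
            return
        for name, value in attrs:
            # Protocol-relative ("//...") and https:// URLs are fine on HTTPS.
            if name == attr_name and value and value.lower().startswith("http://"):
                self.insecure.append((tag, value))


def find_mixed_content(html):
    """Return the insecure sub-resources referenced in an HTML string."""
    finder = MixedContentFinder()
    finder.feed(html)
    finder.close()
    return finder.insecure
```

Running this over a few saved copies of your pages (including the ad slots) gives a quick indication of whether your current advertisers would break once the site is served over HTTPS.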

Question 6: 8:37 - Seroundtable Panda Penalty Discussion - Bad Quality Comments to blame?

The Webmaster Commentary: We will briefly mention a few things before going into the rather long but very interesting conversation between Barry and John Mueller. We think it helps to have our commentary at the beginning to set the scene for the conversation that follows. The first relevant post was back on 2nd October, when Barry Schwartz of the Search Engine Roundtable (Seroundtable.com) asked whether he had been hit by Panda 4.1. If you read Barry’s site, you will know that the posts tend to be short, but most posts attract quite a significant number of comments, often of low quality.

Barry has on several occasions discussed, and invited comments on, what might have caused his site to be hit, and theories ranging from bad comments to indexed category and tag pages (with no value to the reader) and short content have been discussed. We think John Mueller has probably picked up on the main issue, but we bet there is also some old, poor content, or indexed category and tag pages that shouldn’t really be indexed, so Barry might not want to focus too much on just one thing here.

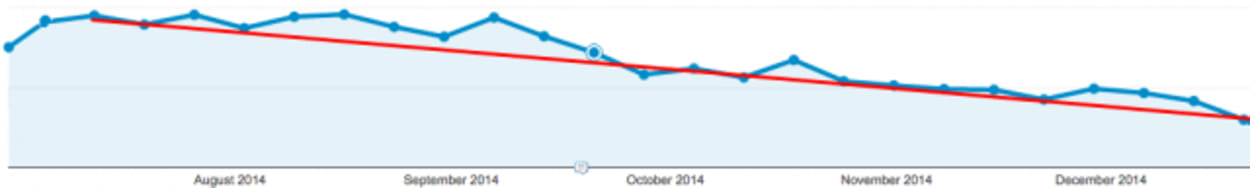

After Barry attended this Google Hangout, he then wrote this post , which he then took his interpretation on what John Mueller said to his readers. You can see the decline in his traffic coincided with the Panda Penalty below:

BARRY : John, can I ask about my own site?

JOHN MUELLER : Sure.

BARRY : So I assume you’ve seen some comments I’ve made or some people have made on the downward trend in traffic on the [INAUDIBLE]. And then somebody was like, what are you doing, what am I doing to improve things on the site to increase the traffic? And I kind of made like a joke that I’m trying to figure out how to become an authority in SEO. I’m trying to write content that people want to hear and people haven’t heard other places, and I’m trying to make sure that people enjoy reading the site. So as you know– I’m pretty sure you know. I mean, it looks like I was hit by Panda. I mean, I don’t know if you can confirm that or not.

JOHN MUELLER : I haven’t actually looked at your site recently, so I don’t really know which part of the trend you’re looking at.

BARRY : OK. […] But I mean, I don’t know what to do. Honestly, I don’t care about the little technical issues.

JOHN MUELLER : Do you have old content?

BARRY : Of course I have old content that’s dated. It’s a news site that’s 11, 12 years old. So I’m sure some of the stories that I have from 11 years ago about PageRank updates or monthly Google crawls and stuff like that are outdated, but they were relevant back then. I’m not going to go ahead and delete historical content because it’s dated.

[Barry goes on a bit about this, about his wiki page, and about how he won’t remove old content just because it is no longer relevant]

JOHN MUELLER : So I guess in general I wouldn’t worry too much about the dated content, and say, this is– I don’t know. Google made this change five years ago, and it’s made 17 changes in that part of the algorithm since then, so it’s no longer relevant. I wouldn’t worry about it from that point of view. In general, I also wouldn’t look at it as too much of a technical issue if you’re looking at Panda issues.

JOHN MUELLER : But instead, I’d look at, I guess, the website overall, the web pages overall. That includes things like the design, where I think your website is actually pretty good compared to a lot of other sites. So from that point of view, I see less of a problem. But it also includes things like the comments. It includes things like the unique and original content that you’re putting out on your site that is being added through user-generated content– all of that as well. So I don’t really know exactly what our algorithms are looking at specifically with regards to your website.

JOHN MUELLER : It’s something where sometimes you’ll go through the articles. And you’ll say, well, there’s some useful information in this article that you’re sharing here. But there’s just a lot of other stuff happening on the bottom of these little posts, where when our algorithms look at these pages in an aggregated way across the whole page, then that’s something where they might say, well, this is a lot of content that’s unique to this page, but it’s not really high-quality content that we’d want to kind of promote in a very visible way. So that’s something where I could imagine maybe there’s something you could do.

JOHN MUELLER : Otherwise, it’s really tricky, I guess to look at specific changes that you could do when it comes to things like our quality algorithms.

BARRY : So you’re telling me to look at the comments.

JOHN MUELLER : Well, I think you have to look at the pages in an overall way. You shouldn’t look at the pages and say– I mean, we see this a lot in the forums. For example, people will say, well, my text is unique. You can copy and paste it, and it’s unique to my website. But that doesn’t make this website, this page, a high-quality page. So things like the overall design, how it comes across, how it looks as an authority– kind of this information that’s in general to a web page, to a website, that’s something that all comes together, but also things like comments. Where a webmaster might say, well, this is user-generated content. I’m not responsible for what people are posting on my site.

[Continues to confirm it is the page overall, not just the main content]

JOSH BACHYNSKI : Hey, John. […] What about the ads? Sometimes I notice there are ads on Barry’s site that seem to me to be a little off-topic.

JOHN MUELLER : I hadn’t noticed that. At least speaking for myself, personally, it’s not something that I noticed. And if these ads are on these pages and they’re essentially not in the way, they don’t kind of break the user experience of these pages, then I see no real problem with that.

[Continues on to say it would be more tricky if the adverts were adult orientated]

MALE SPEAKER : Hey, John […] Barry’s site is one of those trusted sites among SEO communities. I mean, it’s just right up there. And how could something that big be suppressed, and to me, I’m obviously a non-Googler. It doesn’t look like Panda based on the content he has and all the community he’s built around it. Is there anything you can give to Barry that could possibly point him in the right direction of recovering whatever that drop actually was?

JOHN MUELLER : I’d have to take a look at the details of what specifically is happening there. So it’s not that I have anything magical where I can just like pop a URL in and say, oh. Well, this line of HTML needs to be changed, and then everything will be back to normal. To some extent, things always change on the web. So you’ll always see some fluctuations. I imagine that kind of plays into this as well. But I guess looking at the site overall, I do see that it’s a great website. It brings across a lot of really timely and important information. So it’s not something where I would say, offhand, this is something that we would see as low-quality content.

JOHN MUELLER : But sometimes there are elements that are kind of a part of that overall image that also play a role, where we’d see like the primary content is really high quality. Maybe there’s another part of the page that isn’t that high quality, and that kind of makes it hard for our algorithms to find the right balance and say, OK. Overall, this page is 70% OK, 60% OK, 90% OK–this kind of stuff.

MALE SPEAKER : Well, I’m just curious for really any website, not just Barry’s. I mean, all of us, I believe, except for you, John, are non-Google employees. What access do you and your team have back there to be able to really assess what’s going on with the site, good and bad?

JOHN MUELLER : We have a lot of things. I mean, on the one hand, we have to kind of be able to kind of diagnose the search results and see what’s happening there. That’s something where we have to figure out which teams we have to contact internally to kind of find out more about this, to escalate problems that we see. So we do have quite a big insight into what’s happening in search. And we try to kind of refine the information that we have into something that the webmaster can use, something that we can say publicly.

[Continues to explain that algorithms can change, and that they need to tell users a general direction rather than specific things which might not be relevant in the future]

MALE SPEAKER : Hi, John. Previously you mentioned, in regards to comments, that if an article was a considerably long, original article and there were relatively few comments, then they may have little to no bearing on the ranking. Whereas a short article, however good the article may be, that has a great deal of comments may have to be a lot more concerned about that. Some of Barry’s site’s articles are original and knowledgeable content, but they may be like news flashes about a specific topic, so they are short and have a lot of comments. So do you think that’s kind of a potential area that you previously referred to?

JOHN MUELLER : I think that’s something you could look at if you wanted to look at it in a specific way like that. But in general, I just see comments as something that’s a part of the pages that you’re publishing. So if you look at the page overall, you see, well, this is the part that I wrote, and this is the part that other people contributed. Then, of course, depending on the size of your page in general, a large part of the content that you’re publishing is either contributed by other people or written by yourself. So it automatically falls into that area that you talked about, where if you have a short article with a lot of comments, of course those comments are going to be a larger part of your page.

JOHN MUELLER : So that’s something we’ll pick up, as well. So it’s not something where I’d say you have to watch out more for your comments if you have short content. But just generally keep in mind that what you’re publishing is a whole page. It’s not just that specific article that you have on top, but actually the whole page that you’re providing to the search engine.

BARRY : You’re not saying that Google doesn’t understand the difference between the primary content versus the comments on the content. The algorithm does understand the difference, correct?

JOHN MUELLER : We do try to figure that out. And for some sites it’s easier. For some sites it’s not that easy. But still it’s something that is a part of the content of the page. So it’s not that we would say, well, these are comments, therefore we’re going to completely ignore them, or these are comments, and therefore we’re going to kind of 90% ignore them. They’re essentially a part of the page that you’re publishing for us.

BARRY : OK. And going back to the comments themselves– so, at least in my case, I watch the comments. I have Disqus. It does a lot of the filtering of the pure spam stuff. It lets some things through. I have three different queues for how I actually monitor and block spam. I’m pretty good at it. So spam is clearly something that’s not there. Maybe there’s some that creeps through. There’s obviously a lot of people who comment on my site that are impacted by certain algorithms, and either they’re upset by it, or they’re not– whatever. They write them fast, just like I write my stories fast. And sometimes the grammar and the English and stuff like that doesn’t really come out that well. I’m not going to go ahead and rewrite their comments. Do you think maybe I should? Should I rewrite people’s comments to make them spell better or just sound better? And a lot of these things is like, it’s a lot of people helping each other in the comments, like you would in a discussion forum. I could easily block all the comments quickly, which I don’t want to do, because it’s really part of the whole purpose behind the site. But again, what would you recommend in that case?

JOHN MUELLER : It’s hard to say. So I’d kind of have to look at your site in general to kind of figure out what I’d recommend there. I think definitely monitoring the comments for spam is something that should definitely be done. It sounds like you kind of have that covered. How you handle lower quality comments– I guess it’s tricky sometimes. And it’s something where I don’t have any default answer where I’d say you should do this or you should do that. I think rewriting other people’s comments probably doesn’t make that much sense. But maybe there are ways that you can kind of bubble up the higher quality comments and kind of not put the lower quality comments in front and center. I realize that sometimes they’re really tricky, especially when you’re looking at something where people are obviously very emotional about specific changes, for example. That could mean that there’s some, let’s say, ranty comments out there that are really popular by the people but actually that don’t bring a lot of value to the article itself. So finding a balance between bringing them front and center and making sure that the actual high-quality content is front and center from your pages is something where you kind of have to find that balance yourself.

BARRY : OK. I’m just trying to go by the rule that I tell people– forget about SEO, per se. Obviously do everything technically right according to the technical requirements and stuff like that. But really, when you build your website, make sure it’s something that people want, people want to read it, and make sure that it’s something that Google would be embarrassed not to rank well in the search results.

[Further discussion that just goes over what has been said before]

JOHN MUELLER : Yeah. […] But another thing that I’ve seen some sites do is bring only a part of their comments to the main page, and move the bulk of their comments out to a separate URL, for example. I don’t know how much of that would be possible. I don’t know how problematic those comments are in general over time.
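John’s two suggestions (bubbling up the higher-quality comments, and moving the bulk of the comments to a separate URL) can be combined into a simple rule. The sketch below is hypothetical: it assumes each comment already carries some numeric quality `score` (for example, upvotes minus moderator flags; neither Disqus nor Google defines such a field here) and only decides which comments stay on the article page and which move elsewhere.

```python
from dataclasses import dataclass


@dataclass
class Comment:
    author: str
    text: str
    score: int  # hypothetical quality signal, e.g. upvotes minus moderator flags


def split_comments(comments, featured_count=5, min_score=1):
    """Split comments into (featured, remainder).

    featured: up to featured_count of the highest-scoring comments that meet
    min_score, for the article page. remainder: everything else, kept in the
    original (e.g. chronological) order, for a separate comments URL.
    """
    # sorted() is stable, so equal scores keep their original order.
    ranked = sorted(comments, key=lambda c: c.score, reverse=True)
    featured = [c for c in ranked if c.score >= min_score][:featured_count]
    featured_ids = {id(c) for c in featured}
    remainder = [c for c in comments if id(c) not in featured_ids]
    return featured, remainder
```

How the remainder is published (a paginated comments page, a linked archive) is left open; the point of the split is that the article page is then made up mostly of the primary content plus the best of the discussion.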

Question 7: 27:21 - How much weight does Google give to Page Content vs. Booking Engine Quality in rankings?

MALE SPEAKER : Hi, John.

JOHN MUELLER : Hi.

MALE SPEAKER : Talking about quality and high-quality content, in the e-commerce space, we are building a [INAUDIBLE], and we work in a very competitive field. So I wanted to know how much weight has the content of your pages, the high-quality content of your pages, and the high quality of your search engine or booking engine, in order if it’s different than the others, or bring more quality results for our visitors. I’d like to know if it has some weighted one, or is it only about quality content?

JOHN MUELLER : It kind of comes together. So if the system behind your website doesn’t work that well, then essentially it kind of generates low-quality content. So that’s something–

MALE SPEAKER : I was talking about the results for the people. If we bring different results of other booking engines, and they are more with more information or more quality information for the user– not inside our machine. Only talking for the users.

JOHN MUELLER : Well, that’s essentially content. […] Whether it comes from your database and your algorithms that you use to compile that information or if that’s a page that was manually written, that’s essentially content that you’re providing on those pages.

The Webmaster Commentary: This comes down to the Google Webmaster Tools Quality Guidelines. Google will not give specifics, but when it comes to thinking about what Google wants, just think “Quality Original Content”. So if all you do is aggregate data from another source, ask yourself whether Google would want to show your page or the original source. For Google to show your page, it would need to add value. Of course, Google’s algorithms are not black and white and indeed have different shades of grey, so it is always good to refer back to those guidelines, as well as looking at your competitors’ sites and asking “Which would I rather read?”.

Question 8: 28:51 - How long after publishing more quality content will it take before rankings improve?

MALE SPEAKER : And I’d like to know– if we are doing a lot of more quality content in all of our websites, I’d like to know, if we started today publishing it, how much time I need to wait to have improved our rankings? We work in Spain, much or less.

JOHN MUELLER : Yeah. There is actually no fixed time for something like that. So we would probably re-crawl it fairly quickly, if you submit it in a site map or with a feed. We would probably show it up in our index within a couple of days, depending on how much content, what kind of site it is. So we would know about it fairly quickly. And from that point of view, we would be able to show it in the search results, then. So I would guess within a couple of days you would see that we would show that in search, but it’s hard to say when that content would have an effect overall on your website’s ranking. Because that’s something that just takes a lot more time to kind of aggregate that information, and to kind of turn that into a general positive signal for your website where we’d say, well, overall, this website has gone from having very little high-quality content to a lot of high-quality content, and that’s something where I would imagine you’d be looking at more of a time frame like maybe a half a year, or something like that.

The Webmaster Commentary: There are a couple of points we feel need to be added here. Firstly, you can decrease the time it takes to get new posts indexed by using the Fetch as Google feature in Webmaster Tools. For example, when we use it on this site, new posts are indexed within a couple of minutes. Secondly, we don’t believe it is as simple as rankings taking six months to increase after you publish more quality content, although we suspect John Mueller is talking about how the algorithms assess the site as a whole, perhaps in reference to Panda, Google’s content quality algorithm. For instance, freshness also plays an important role, and there are more indirect consequences as well: good quality content is more likely to attract natural backlinks, which can also bring direct referral traffic. However, it is useful to know that when you significantly up the game of your website by really focusing on quality, the big gains can take around six months, much longer than we thought.

Question 9: 30:28 - Question about merging 2 websites together.

MALE SPEAKER : And a little more question. I have a website. It’s only a one-page website. And I’d like to merge with other websites in order to keep the backlinks and the content. How could I do this? If I want to merge this one site with one page to the other website, but the page already exists?

JOHN MUELLER : So you’re combining two websites?

MALE SPEAKER : Yeah, but not creating a new web page; merging it with an existing web page, if that’s possible?

JOHN MUELLER : Sure. I mean, one thing you can do is redirect one of the URLs to the other one. And that helps us to combine the signals that we have for those pages. And then we can re-crawl and re-index that new page that you have based on whatever is there. So redirecting pages and combining them like that is something that I think is a normal part of the web. It’s something that always happens, where you refine your content. And you say, well, I had five pages, and I combined them into three. Or I combined them into one page, and I make that page much better than those five individual pages. And that’s a normal part of the web. So I wouldn’t worry about doing that kind of maintenance. I think that’s completely fine.

The Webmaster Commentary: We would add a word of caution when redirecting a page on one site to another site. We know that Google does not like sitewide backlinks, as they are not natural and can potentially fall foul of the Google Penguin Penalty. Say the page you redirected to the new site is linked in the sidebar or menu of the old site. In essence (and we speak from experience here), Google will count every one of those sidebar or menu links as a backlink, all pointing to the new redirected page. Obviously this won’t be relevant in the example above, as only a few pages are involved, but it is worth taking into consideration if you intend to redirect the odd page from a much larger site.
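For anyone merging a small site into a larger one as John describes, the redirect itself is usually a permanent (HTTP 301) redirect from each old URL to the page that replaces it. John does not name a status code, so treat the 301 as our assumption. The sketch below uses only Python’s standard-library `http.server` to show the idea; the `REDIRECTS` mapping and the paths are hypothetical, and on a real site you would normally configure this in your web server or CMS rather than run a separate server.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.parse import urlsplit

# Hypothetical mapping: retired paths -> the page that now holds the content
REDIRECTS = {
    "/old-one-page-site/": "https://www.example.com/combined-page/",
}


def redirect_target(path):
    """Return the URL an old path should permanently redirect to, or None."""
    return REDIRECTS.get(path)


class RedirectHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Ignore any query string when looking the path up
        target = redirect_target(urlsplit(self.path).path)
        if target is None:
            self.send_error(404, "Not Found")
            return
        # 301 Moved Permanently signals that the move is not temporary
        self.send_response(301)
        self.send_header("Location", target)
        self.end_headers()


if __name__ == "__main__":
    # Serves on localhost:8000 until interrupted
    HTTPServer(("127.0.0.1", 8000), RedirectHandler).serve_forever()
```

Keeping the lookup in a plain function (`redirect_target`) makes the mapping easy to check without starting a server.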

Question 10: 32:05 - Can links from bad websites have a negative effect on my website?

WRITTEN SUBMITTED QUESTION : Can natural links from domains like directories (with additional value such as a blog or forum on the same domain), or from other websites with many unnatural outbound links, be bad for my rankings? Would Google flag these as bad links, bad pages, or bad domains? So I think this question is: can links from bad websites have a negative effect on my website?

JOHN MUELLER : And in general, that’s something where I’d say no. That doesn’t have a bad effect on your website. If we know that a website, for example, is selling links, then that’s something where we could say, well, we’re just going to disregard all of the links from this website. So that’s something where, in general, that’s not going to cause any problems. On the other hand, if the majority of your links are from sites that we would classify as being problematic, then that can look a bit fishy to our algorithms, where it looks like, well, all of your links are just from directories, and none of your users are recommending your website. Then maybe there is something problematic. Maybe there is something that you need to look at there. So in general, I’d say no. Having individual links from sites like that is not a problem. If you see that all of your links are like that, and especially if you have kind of done this yourself, if you’ve hired an SEO to create those links, then that’s something that you might want to clean up as well. So that’s something where cleaning it up with a disavow tool can help. So you could try that, as well.

The Webmaster Commentary: Basically, John is saying you don’t have to worry unless your backlink profile is unnatural. Everyone has a few bad links, but if your backlink profile consists wholly of bad backlinks, then you have something to worry about. You can read more about the Google Penguin Penalty here. If you are worried, you can use the Disavow tool, which we mentioned earlier in this article. Another alternative, which John Mueller does not really recommend on its own, is to focus only on building good quality backlinks. We think this will just leave you in a grey area, so the best way to proceed is to attract high quality backlinks and also disavow the poor quality ones.
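If you do go down the disavow route, the file uploaded to the Disavow Links tool is plain text: one entry per line, `domain:` in front of a hostname to disavow every link from that domain, a full URL to disavow a single page, and `#` at the start of a comment line. This reflects our understanding of Google’s documented format, so check the current help page before uploading. A minimal Python sketch that assembles such a file (the function name and the domains are placeholders):

```python
def build_disavow_file(domains, urls=(), note=None):
    """Return the text of a disavow file.

    domains: hostnames to disavow entirely (written as "domain:host")
    urls: individual linking pages to disavow
    note: optional comment, written on a line starting with "#"
    """
    lines = []
    if note:
        lines.append("# " + note)
    # Normalise, de-duplicate and sort so repeated runs give stable output
    for host in sorted({d.strip().lower() for d in domains if d.strip()}):
        lines.append("domain:" + host)
    for url in sorted({u.strip() for u in urls if u.strip()}):
        lines.append(url)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    text = build_disavow_file(
        ["spammy-directory.example", "Link-Seller.example",
         "spammy-directory.example"],
        urls=["http://blog.example.net/paid-links-page.html"],
        note="Links we could not get removed manually",
    )
    print(text, end="")
```

Running it prints the comment line, then `domain:link-seller.example` and `domain:spammy-directory.example` (lower-cased, de-duplicated and sorted), then the single URL.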

Question 11: 33:52 - If a site has bad links would good links help without using the Disavow Tool?

MALE SPEAKER : Let’s take a hypothetical situation where a webmaster doesn’t know about the Webmaster Tools disavow tool, and the majority of his links are directories or websites selling links, and he is obviously affected by the Penguin penalty. Meanwhile, he goes ahead and gets some good-quality links, and the percentage of low-quality links changes (gets smaller). But again, he doesn’t use a disavow file or anything else. Would this help him– so if the majority of the links become the quality links, would this help him remove it, or would the Google robot remove the Penguin penalty?

JOHN MUELLER : That would definitely help. Yeah. So, I mean, we look at it on an aggregated level across everything that we have from your website. And if we see that things are picking up and things are going in the right direction, then that’s something our algorithms will be able to take into account. So in the hypothetical situation of someone who doesn’t know about any of this and they realized they did something wrong in the past and they’re working to improve that in the future, then that’s something that our algorithms will pick up on and will be able to use as well. Still, if you’re in that situation, it wouldn’t be that I’d say you should ignore the disavow tool and just focus on moving forward in a good way, but instead really trying to clean up those old issues as well. And it’s not something where we’d say that using the disavow tool is a sign that you’re a knowledgeable SEO and that you should know better about these links. It’s essentially a technical thing on our side, where we don’t take those links into account anymore. It doesn’t count negatively for your website if you use a disavow tool. It’s not something you should be ashamed of using. If you know about this tool, if you know about problematic links to your site, then I just recommend cleaning that up.

MALE SPEAKER : […] I mean, would the penalty actually get removed if the majority of the percentage of low-quality links diminishes? The actual Penguin penalty– would it be removed?

JOHN MUELLER : Yeah. That’s something that our algorithms would take into account– where if they look at the site overall and they see that this is essentially improving, if it looks like things are headed in the right way and the important links are really good links that are recommendations by other people, then they’ll be able to take that into account and modify whatever adjustment there was made with that change there on that website. So they would take that into account. I wouldn’t say that you have to have more than 50% and then the algorithm will disappear for your website. Let’s say there are lots of shades of gray involved there, where the algorithm could say, well, this looked really bad in the beginning. They worked a lot to kind of improve things overall. Things were improving significantly across the web with lots of good recommendations for this site. So it’s kind of headed in the right direction. So it wouldn’t be that it disappears completely, but maybe it’ll kind of step-by-step improve.

MALE SPEAKER : OK, thank you.

Question 12: 37:25 - Question about Negative SEO and Disavowing links daily

MALE SPEAKER : John, if I can jump in just to follow-up on his last question.

JOHN MUELLER : Sure.

MALE SPEAKER : Regarding the disavow, I’ve posted a bunch of– probably Barry’s site, as well. People know me as the guy that disavows twice a day. I’ve been having to– I do it in the morning and at night, because we’re still undergoing a negative SEO attack– I should say a negative link spam attack on the site. How will we ever know if the disavow tool is actually working? I only ask that because a month after the attack started, our trajectory was going– let’s see if I can do this perspectively right– straight up, and we are literally just down one month after. And I was disavowing twice a day since day one. We have probably 1,700 domains disavowed. We only have 800-odd actual domains linking to our site– legitimate linking domains. Basically, what should we do: keep disavowing and hope it’s going to work?

JOHN MUELLER : You sent me that out on Google+ yesterday, right?

MALE SPEAKER : Yes.

JOHN MUELLER : Yeah. So I looked at your site briefly, and there was really nothing from those problematic links causing any problem. So that’s something where if you’re seeing negative changes, then they wouldn’t be resulting from those bad links that you’re seeing there. So either you’re handling the disavow properly, or we’ve been able to kind of ignore those links anyway. So from that point of view, I think you’re probably doing the right thing by cleaning up these kind of issues as you see them pop up, but I wouldn’t assume that all of the changes you’re seeing in search are based on those links. So things like looking at your site overall to see what you can improve in general– that’s something I’d still keep in mind. And that’s something I’d still focus on, as well.

[Discussion continued by saying don’t just concentrate on links, but on the site overall]

The Webmaster Commentary: We have been hit by a negative SEO attack in the past, and this is exactly what we did; we were never hit by the recent Penguin Penalty updates. We used Cognitive SEO (it is quite pricey at around $99), but it does simplify the process of diagnosing bad backlinks, identifying many of your backlinks using data from Moz, Majestic and Ahrefs (no one service will find them all), as well as, of course, allowing you to import your Webmaster Tools data.
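Because no single backlink tool finds every link, the twice-a-day routine described above boils down to merging several exports and checking which linking domains are new since the last disavow file. A rough Python sketch of that merge step, assuming each export has already been reduced to a list of linking-page URLs (the tool names, domains and function names are hypothetical, and we make no assumption about any vendor’s CSV layout):

```python
from urllib.parse import urlsplit


def linking_domains(urls):
    """Reduce linking-page URLs to a set of lower-case hostnames."""
    hosts = set()
    for url in urls:
        # .hostname is lower-cased by urlsplit; it is None for strings
        # without a scheme and host (e.g. "example.com/page"), which we skip
        host = urlsplit(url.strip()).hostname
        if host:
            hosts.add(host)
    return hosts


def new_domains_to_review(exports, already_disavowed):
    """Merge several tools' exports and return domains not yet disavowed.

    exports: mapping of tool name -> list of linking-page URLs
    already_disavowed: hostnames already listed in the disavow file
    """
    merged = set()
    for urls in exports.values():
        merged |= linking_domains(urls)
    return sorted(merged - set(already_disavowed))


if __name__ == "__main__":
    exports = {
        "tool_a": ["http://spam-one.example/page1",
                   "http://Good-Site.example/review"],
        "tool_b": ["https://spam-one.example/page2",
                   "http://spam-two.example/"],
        "webmaster_tools": ["not a url"],
    }
    # Prints ['good-site.example', 'spam-two.example']
    print(new_domains_to_review(exports, {"spam-one.example"}))
```

The result is a list to review by hand, not to disavow automatically: it includes legitimate domains too, and `www.` and bare hostnames are treated as different domains.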

Question 13: 40:08 - Where does the Knowledge Graph take its data?

WRITTEN SUBMITTED QUESTION : From where does the Knowledge Graph take its data? Google’s not showing the Knowledge Graph of my website. What can I do in order to appear with a Knowledge Graph?

JOHN MUELLER : For the Knowledge Graph, I think we use a variety of public data that’s available. And that’s something that we show algorithmically. It’s not something that we’d show for every website, for every query. So just because that information is available doesn’t mean that we’d always show it for your website, as well. So with that in mind, what I’d recommend doing is just primarily focusing on your website and making sure that people are seeing it as something important, that they’re writing about it, that they’re talking about your website, that we do have this additional information to back up that it would make sense to actually show a Knowledge Graph entry for that website.

The Webmaster Commentary: This is about the best article we could find on the Google Knowledge Graph, and how you need to start thinking semantically when writing your articles rather than in terms of keywords. We quote from the article:

The Knowledge Graph was built to help with that mission. It contains information about entities and their relationships to one another – meaning that Google is increasingly able to recognize a search query as a distinct entity rather than just a string of keywords.

Question 14: 41:09 - How do you explain the extremely small changes in search results in the last three weeks?

WRITTEN SUBMITTED QUESTION : How do you explain the extremely small changes in search results in the last three weeks?

JOHN MUELLER : I don’t really know what specifically you’re kind of pointing at there, but fluctuations are essentially normal. And these are things that always happen with our algorithms, even if there is nobody manually flipping switches at the moment. So these are the kind of fluctuations that always can happen– my guess. I don’t really know what specifically you’re pointing at. Maybe there’s also technical issues that are sometimes involved on these websites.

Question 15: 41:46 - Use Keyword Based Traffic to Assess User Satisfaction?

JOSH BACHYNSKI : So you said a couple times in the past that in terms of the quality algorithms, the traffic we should be looking at in terms of their satisfaction should be mostly keyword traffic. And you’ve also said to me before that organic traffic is most important to look at in terms of how satisfied they are. Is that still the case? Should we still be focusing mostly on the keyword-based traffic?

JOHN MUELLER : I’m not really sure what you’re referring to there.

JOSH BACHYNSKI : Not in terms of I know you guys don’t use the Analytics bounce rate or anything in the Analytics package. But in terms of our seeing it from our perspective, what traffic do you think we should focus on there to make sure that they’re satisfied? Because you used to say keyword traffic, or organic-based traffic. I was just wondering if we should extend that to all traffic now, or–

JOHN MUELLER : I don’t know what I could best recommend there. So my suggestion would be to really take into account anything that you have available to kind of understand how happy users are with your website. So that’s something where you can take into account whatever metrics you have available on your side, which could be based on analytics. It could be based on social mentions. It could be things like +1’s or thumbs up, thumbs down– those kinds of things on a site. I wouldn’t focus on any specific metric from that point of view. So anything that you can do to figure out which parts of your site are really well-received by your users, where they think this is great content, and which parts of your site are not so well-received by your users, that’s the kind of thing where I would focus on. That’s the kind of thing where our algorithms should be able to pick that up on, as well. So instead of focusing on specific metrics and saying only people coming through with keyword traffic from organic search results are the ones that we need to make happy– I’d really think about what you can do overall on your website to find the parts of your site that work really well, improve the parts that don’t work so well, and make sure that everyone who kind of comes to your site leaves as a happy user and is willing to kind of recommend your site to other people, as well.

JOSH BACHYNSKI : So it makes more sense to take a broader approach and look at everyone and make sure everyone is satisfied with the content. For example, recently I had a client– we did a TV spot, and we had a landing page for the TV spot. And we didn’t robot it out or anything. Maybe we should have. But we had thousands of people coming to this one landing page, and then they bounced right away. And some went into the site, some didn’t. Let’s just say it was really low-quality traffic in the sense that they were very uninterested. But we did get thousands of people through. So that wasn’t organic traffic, but we don’t know what Google’s tracking there to manage satisfaction. And we’re not sure if we should be worried about that. Should we?

JOHN MUELLER : I wouldn’t worry about that from an SEO point of view. I think it helps a lot to understand your users and kind of which parts of your site work well and which parts don’t. And indirectly, that’s something that we would try to pick up on, as well. But it’s not that we primarily look at the users coming to your website and say, oh, these all typed the URL that was in a TV spot. And therefore, they’re not as relevant or they’re really relevant. It’s something where I think internally you get a lot of information out of that, and indirectly, that can lead to us recognizing which parts are relevant on your website. But that’s not something where we’d see a direct relationship between the traffic that you drive to your website through a TV spot and how we would be able to rank that, or that site in general, or maybe a related site to that. So a lot of times people have a different domain for a TV campaign because they want something short and memorable that has a specific landing page, and that’s not something we’d be able to take into account from search anyway. So if these are things that are outside of search, then that’s not something where I’d say there’s a primary direct effect available for us to even look at there. But it does always give you more information about how people are looking at your site, and maybe how other people are looking at your site, and which parts you could improve on, which would, then, be something that we’d be able to kind of look into.

Question 16: 46:35 - Discussion about the “Wrong” type of traffic.

JOSH BACHYNSKI : That actually kind of goes back– dare I say– to the comments question, because [LAUGH] there’s a quality of traffic, right. There’s very interested traffic that’s going to love your site, or have a better percentage chance of loving your site, and then there’s traffic which is at best uninterested and at worst cruel. And so who do we have to cater to? You know what I mean? Sometimes it doesn’t make any sense to try to cater to that second group, because they’re just a bunch of curmudgeonly people anyway.

JOHN MUELLER : Good question. Yeah. I don’t really have a direct answer for that one. Yeah. So I think this is something where it’s always a balance between which people we send to your site through search and how you respond to that. And sometimes you might say, well, Google is sending me people who I don’t really want on my site because they’re all idiots, or because they actually don’t like what I’m publishing, and I prefer to have people who kind of like what I’m publishing. And that’s something where– at least at the moment, there’s no direct feedback loop where you could say, hey, Google, stop sending me these types of people, and send me more of these types of people. So it’s kind of a tricky situation there. But I don’t really have any, let’s say, direct answer for that.

[Discussion continued with one suggestion saying write more specific content, rather than generic content that might get more diverse traffic]

Question 17: 50:04 - Question about Hidden Tabbed Content. Is tabbed content bad for SEO?

MALE SPEAKER : Hi, John. I have a question about page layout.

JOHN MUELLER : OK.

MALE SPEAKER : I’d like to know– I’ve seen some content organized into tabs with CSS, hiding some tabs and showing only one of them, the tab that is selected. Is it a good solution or a bad solution for showing a lot of content without making a really big website, web page? What do you think about it?

JOHN MUELLER : There are kind of pros and cons there. On the one hand, if the user is searching for something that’s in one of the tabs, then they’ll be kind of confused if they land on this page. And they’ll say, well, I searched for this, but this page is showing me something about something slightly different. So that’s something that our algorithms do try to take into account, where they say, well, this is the visible content. And this is additional content on the page that’s not immediately visible. So we’ll be able to say, well, maybe this content isn’t that relevant for this specific page because it’s not really visible by default. So for content that’s really critical for a page, where you would say users are searching for this and they want to find this, I’d recommend using separate URLs for those specific tabs. If this is just additional content that you’re providing on those URLs which just provides a little bit more context, which maybe gives the user a chance to submit a form or something like that, then that’s something that I think is perfectly fine to be used in tabs. So kind of think about does it make sense to put this on a separate URL, or is this something that I think users might want to open if they want to kind of get that information but they’re not primarily looking for that information. So kind of make a decision based on that.

The Webmaster Commentary: This is one that actually caught us out when we used a Review Plugin that stored the reviews in tabs (they subsequently changed how they displayed the reviews after we brought it to their attention). It was only after losing some rankings that we discovered, by chance, that the reviews left on our site were not indexed in Google. The wider public caught on to this around November 2014, and you can see a couple of posts on the issue here and here.

Question 18: 52:05 - A question about posts with large header images.

MALE SPEAKER : Nowadays it’s very typical to have big headers with big images, like you can see on media websites, with most of the content below the fold, or mostly below the fold. What do you think about that? It’s a pretty nice design, but I don’t know what Google thinks about it.

JOHN MUELLER : That’s usually fine. That’s not something where I’d say you have to worry about that. I’d only worry about something like that if the images are really unrelated to the primary content. So if the image is an ad, for example, if it’s something from another website, if it’s something pointing to other articles on this website, then the user will land on that, and they’ll be kind of lost. But if the image is a part of the primary content where you say, going to this page and kind of having this nice header image on top encourages the user to kind of read the rest of the page, and it all matches the same general topic, the primary content, then that’s something that is perfectly fine. That’s not something I’d discourage.

The Webmaster Commentary: This is quite interesting, as we removed our large full-width header images a few months ago, fearing this would be something Google Panda took into account. In any event, we actually prefer having a small right-aligned image on our posts, as it allows our readers to get to the text faster. That has to be important, right? Well, apparently Google does not think it’s that important.

Question 19: 53:20 - Can you tell us more about what’s happening in Webmaster Tools next year

WRITTEN SUBMITTED QUESTION : Can you tell us more about what’s happening in Webmaster Tools next year?

JOHN MUELLER : We try not to preannounce too many things, so I don’t really have that much more to let you know about that there. I think you’ll see more and more happening with mobile. That’s something that I believe is a really important topic. That’s something that I think a lot of websites still aren’t getting completely right. So that’s obviously one area that you’ll see more happening there. We also talked about the Search Query Report, I believe, in one of the last Hangouts, where we’re working on some changes there. So I’d imagine in the course of the year you’d see some changes there. But lots of things can still happen, and depending on the feedback that we get from you guys, obviously we can kind of tailor the future as well and say, well, this is a more important topic than other things, so we should focus more on that. But otherwise, I don’t really have anything interesting that I could preannounce for you guys. What I’ll try to do where we can do that is to kind of provide beta versions for people if we have something that we’d like users to try out before we make it into a final version. I don’t know how easily we’ll be able to do that with anything that’s immediately coming up, but I think that’s something that’s always really useful for us. So we’ll try to do that where we can.

Question 20: 54:57 - Does Panda or Penguin penalty trigger a mass crawl of your site?

BARRY : John, at the eight-minute mark, Josh asked you about Googlebot being triggered to crawl more of a site based off of an algorithm. If that’s the case– that Googlebot can be triggered, say, to crawl more based off of the Panda algorithm or based off of the Penguin algorithm– does that happen?

JOHN MUELLER : I don’t think we do that. I don’t think we do that based on those specific algorithms. Obviously, we have lots of algorithms, and some of these are algorithms specifically for crawling and indexing. So if we see, for instance, specific changes on a site, then we might start re-crawling that. And that’s obviously something where if you make bigger quality changes on your website, then we’ll see that and say, well, lots of things have changed on this website. Maybe we should take a look at it a little bit better. So maybe there is some kind of correlation there that, if you make specific changes on your website, then we’ll start crawling more to kind of recognize those changes. But it wouldn’t be specific to the quality algorithms where we’d say, well, your site has– I don’t know– some Panda on it, therefore we’ll crawl it more frequently or we’ll crawl it less frequently. It’s usually more of a technical issue where we say, well, things have changed on this website, therefore we should be crawling it to make sure that we pick up those changes.

Question 21: 56:22 - Is Google Panda a penalty?

MALE SPEAKER : John, a quick follow-up on Panda in general. I was actually asking in the group chat, as well. Would Barry’s issue be something like a penalty? So would you consider Panda actually a penalty? Or do you see a problem with the website– like, the website has an issue, so we’re going to demote it until the issue is resolved? Or something like the comments change how Google understands the content of the page and says, this page isn’t relevant for these keywords anymore. We think it’s relevant for something else, so we’re going to demote it because of that.

JOHN MUELLER : Yeah. So we don’t see the Panda or the Penguin algorithms as penalties, internally. We see these as essentially part of our search quality algorithms to kind of figure out which pages are relevant to specific queries. So that’s not something where we’d say we’re penalizing a site for being low quality. But rather, our algorithms are looking at these pages and saying, well, overall, maybe we were ranking this site or these pages in a way that we shouldn’t have in the past, and we’re kind of adjusting that ranking. Or we’re adjusting the ranking so that we can make sure that other pages that we think might be higher quality content are more visible in the search results. So it’s not that we would see this as a penalty, but rather as something just a part of our search algorithms that adjust the ranking based on the perceived relevance for these individual queries.

MALE SPEAKER : OK. So basically it’s mostly a relevance problem, rather than a “the site is bad, there’s something wrong with it” problem.

JOHN MUELLER : Yeah.

MALE SPEAKER : Interesting.

The Webmaster Commentary: Well, they can call it whatever they like, but we and many others still think it is a penalty! But of course, it is only natural that Google will prefer the less controversial term “re-adjustment”.

Question 22: 58:10 - Discussion about an old penalty hit site’s chance to recover

LYLE ROMER : Hey, John. For the last question of the year– from me, at least– if somebody basically is competitive, highly ranking on competitive keywords and getting good natural links along with the other people in the space, and then that site is suppressed by one of the quality algorithms for a year, a year and a half, two years, at which point during that period of time they’re not gaining those organic links because they’re not able to be found, once they’re no longer suppressed and they’re back kind of near the top, is it ever possible for that site to kind of catch up with the natural high-quality links of the other sites that were never suppressed?

JOHN MUELLER : Sure. Yeah. I don’t see a problem with that. So I mean, things always change on the web. So if you’re talking about a period of more than a year, then lots of things will have changed. Sites will have changed. The way these sites function sometimes changes. And if your site is the same as it was five years ago, in an extreme case, then even if we kind of took out a manual action and made the site rank completely organically again, it wouldn’t show up in the same place in the search results in the same way as it did five years ago. So over the course of one year, things change quite a bit. Over the course of multiple years, they’ll change quite a lot. So obviously things will change during that time. But that doesn’t mean that the site is kind of stuck and kind of lost forever. It can become just as relevant as all of the other sites out there. It’s just a matter of making sure that it works well, it’s something that users want to find, it’s something that our algorithms think belongs in the search results, and we should show it more prominently.

The Webmaster Commentary: Well, John Mueller has already said this in a previous Hangout:

If you’ve had a manual action on your website and that’s been revoked, then essentially there’s no bad history attached to your site. It’s not harder to rank anymore… It’s not the case that there is any kind of a grudge that our algorithms would hold against a site that has had a manual action.

If you have disavowed links, then it is always possible that you have removed some that Google was still counting as positive, although Google would like you to think that bad links had already been discounted anyway. For a detailed commentary about this, you can check this post. We would just remind you not to focus only on getting rid of bad links to recover, but also to review your site’s content generally for needed improvements (the quality algorithm is constantly changing) and to attract high-quality links by creating high quality content.

Question 23: 60:06 - Site Discussion - Indicates sometimes Google needs to change their algorithm

MALE SPEAKER : John, since we’ve just finished on the sites that haven’t ranked for 18 months to two years question, is there any chance you can give me an update on what’s happening with ours?

JOHN MUELLER : I know some engineers are looking at it.

MALE SPEAKER : We saw a very slight increase in mid December, but we’re a gift company. So obviously even if we’re suppressed, you’ll still have a seasonality for normal customers, because normal customers know us, and they will search for us specifically, our brand. And we’ve had, over the last 10 years, 100,000 customers. So there’s going to be some just coming back anyway, no matter what Google does. But we did see a slight jump in the middle of December in terms of organic visitors and there was a shift, but it’s now going back to normal. So I don’t know if even you can’t tell me if something’s shifted, whether you can tell me whether that was seasonal and/or if there was something you can see there as a change. And that was the site with the E rather than the X.

JOHN MUELLER : I’d have to take a look at the details there. […] I don’t have anything specific. I know the engineers said they would look into your specific case to see what was happening there. And the last I heard, they were kind of looking at that as something that we need to improve on our side, as well. So from that point of view, I think it’s on the right track, but I don’t really have an update on how things will change there.

[Conversation continues for a minute or two, but no new information]

The Webmaster Commentary: Well, it just goes to show it can be worth sending John Mueller a message on Google+ (https://plus.google.com/+JohnMueller) if you think something is not quite right with your rankings.